Alliance for Digital Society

I carried out a study (23 interviews + desk research) for the Alliance for Digital Society, an organisation that unites multiple parties in order to realise digital inclusion. As Artificial Intelligence rather suddenly came into the spotlight in 2023, the Alliance wanted to gain insight into its effects on digital inclusion and digital citizenship. The objective of this study is to provide an informative overview of the situation and developments around AI from the perspective of digital inclusion and digital citizenship, enabling the Alliance and its partners to develop potential actions.

AI = system technology

Since the launch of ChatGPT in November 2022, AI may look like just another big hype. However, ChatGPT (and similar programs based on foundation models) is not the only type of AI; speech recognition and computer vision were introduced earlier. The combination of these AI types will have a big impact on all walks of life: AI is a system technology, comparable to the combustion engine or electricity.

AI will help digital inclusion for many, but not all

In the Netherlands, a diverse group of an estimated three to four million people (out of a population of about 18 million) cannot sufficiently keep up with the digital world.

AI can indeed empower some of these groups. AI makes it possible to interact with the internet in ways other than typing and reading. It is already possible, and will increasingly become so, to interact with the internet through spoken dialogue, regardless of language. In the words of one respondent whom I interviewed for this study: ‘away from the keyboard and away from English’. This will be of enormous help to people who have difficulty writing or reading and to people who are visually impaired.

However, AI will not help everyone in this group: some people will never participate in the digital world themselves, because they cannot or do not want to. Digital services should therefore always offer human contact as an alternative.

Digital citizenship

What exactly is digital citizenship? In the report, I used a definition from the Rathenau Institute, which distinguishes between the individual level (personal benefits), the community level (effects on community cohesion) and the political arena (participation and representation).

At the individual level, AI’s opportunities for digital citizenship lie in applications that assist people (e.g., as personal assistants) or enable new ways to generate computer code or images. For organisations, sectors, or communities, the opportunities primarily lie in applications that enhance efficiency, effectiveness, or customisation. Finally, AI offers opportunities for participation in public debate through deliberative democracy applications. While this sounds positive, there are significant downsides, as discussed next.

Downsides of AI: the four B’s

The downsides of AI can be summarized with four B’s:

- Bias: The datasets used by AI applications can contain unwanted bias, are often not transparent, are sometimes collected without regard for copyright and sometimes contain privacy-sensitive data.

- Black box: Generative AI applications like ChatGPT, in particular, use billions of parameters, which produce outcomes that cannot be traced back or explained and that are sometimes outright nonsense. This is rather dangerous, as people tend to believe what the computer says: the so-called automation bias.

- Big Tech: Important parts of AI technology are in the hands of a few Big Tech companies. This near-monopoly makes public institutions increasingly dependent on Big Tech and threatens their digital autonomy.

- Bad actors: Bad actors can use AI applications to produce large amounts of disinformation and deepfakes. Alas, AI is also used in cybercrime.

Responsible AI is the answer

These downsides have negative effects on human rights and public values. Therefore, a lot of work is being done to include the so-called ELSA aspects (the Ethical, Legal & Societal Aspects of AI) in the development, implementation, and use of AI applications. AI that meets the ELSA preconditions is called responsible AI.

A personal note

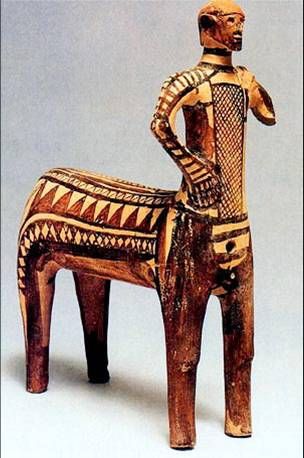

AI will certainly change our lives in the coming years. Our interaction with computers will transform profoundly. In this respect, I found the image of a centaur particularly striking: the human should be the head, the computer the legs. However, with AI applications combined with the automation bias, it could become the other way around. With Uber, this is already the case: the Uber app manages the drivers and, to some extent, also the passengers.